Modelling temperature variation on distant stars

New research is helping to answer one of the big questions that have perplexed astrophysicists for the past 30 years: what causes the changing brightness of distant stars called magnetars?

Magnetars were formed from stellar explosions, or supernovae, and they have extremely strong magnetic fields, estimated to be around 100 million million times stronger than the Earth's magnetic field.

The magnetic field on each magnetar generates intense heat and x-rays. The field is so strong that it affects the physical properties of matter, most notably the way that heat is conducted through the crust of the star and across its surface, creating the variations in brightness which have puzzled astrophysicists and astronomers.

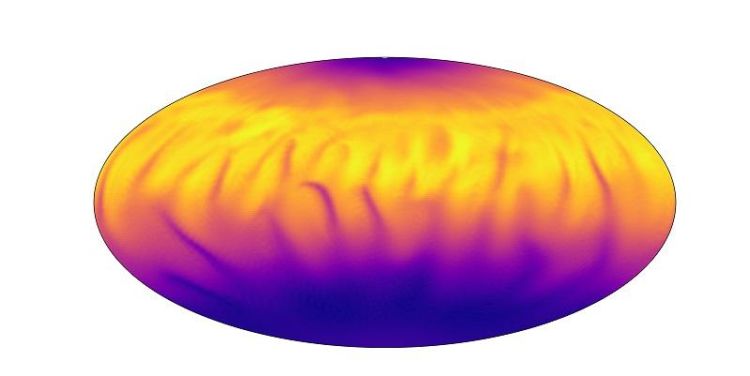

A team of scientists, led by Dr Andrei Igoshev at the University of Leeds, has developed a mathematical model that simulates how the magnetic field disrupts the uniform distribution of heat that would conventionally be expected. The result is hotter and cooler regions whose temperatures may differ by one million degrees Celsius.

Those hotter and cooler regions emit x-rays of differing intensity – and it is that variation in x-ray intensity that is observed as changing brightness by space-borne telescopes.
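As a rough illustration of why a temperature difference shows up so strongly in brightness, the sketch below treats each region as an ideal blackbody, whose emitted flux per unit area scales with the fourth power of temperature (the Stefan-Boltzmann law). This is a simplification for illustration only and is not the team's model. The two temperatures are hypothetical values, chosen only so that they differ by one million degrees.

```python
# Illustrative sketch only: ideal blackbody emission, not the research team's model.

# Stefan-Boltzmann constant in W m^-2 K^-4
SIGMA = 5.670374419e-8


def blackbody_flux(temperature_k: float) -> float:
    """Return the flux per unit area emitted by an ideal blackbody at the given temperature (kelvin)."""
    return SIGMA * temperature_k ** 4


# Hypothetical temperatures (assumptions, not values from the study),
# chosen to differ by one million degrees.
t_cool = 0.5e6  # kelvin
t_hot = 1.5e6   # kelvin

ratio = blackbody_flux(t_hot) / blackbody_flux(t_cool)
print(f"Hot region emits about {ratio:.0f} times more flux per unit area than the cool region")
```

Under these assumed temperatures, the hotter region is three times as hot but emits about 81 times as much energy per unit area, which is why even a modest hot spot can dominate what a telescope sees.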

The findings, in a paper titled "Strong toroidal magnetic fields required by quiescent X-ray emission of magnetars", have been published today in the journal Nature Astronomy. The research was funded by the Science and Technology Facilities Council (STFC).

Dr Igoshev, from the School of Mathematics at Leeds, said: “We see this constant pattern of hot and cold regions. Our model – based on the physics of magnetic fields and the physics of heat – predicts the size, location and temperature of these regions – and in doing so, helps explain the data captured by satellite telescopes over several decades and which has left astronomers scratching their heads as to why the brightness of magnetars seemed to vary.

“Our research involved formulating mathematical equations that describe how the physics of magnetic fields and heat distribution would behave under the extreme conditions that exist on these stars.

“To formulate those equations took time but was straightforward. The big challenge was writing the computer code to solve the equations - that took more than three years.”

Once the code was written, it took a supercomputer to solve the equations, allowing the scientists to develop their predictive model.

The team used the STFC-funded DiRAC supercomputing facilities at the University of Leicester.

Dr Igoshev said that once the model had been developed, its predictions were tested against the data collected by space-borne observatories. The model was correct in 10 out of 19 cases.

The magnetars studied as part of the investigation are in the Milky Way and are typically 15,000 light years away.

The other members of the research team were Professor Rainer Hollerbach, also from Leeds, Dr Toby Wood, from Newcastle University, and Dr Konstantinos N Gourgouliatos, from the University of Patras in Greece.

The image shows the cooler (blue) and hotter (yellow) regions on a magnetar. The source data came from the magnetars 4U 0142+61, 1E 1547.0-5408, XTE J1810-197 and SGR 1900+14.

Further information

For further details, please contact David Lewis in the press office: d.lewis@leeds.ac.uk